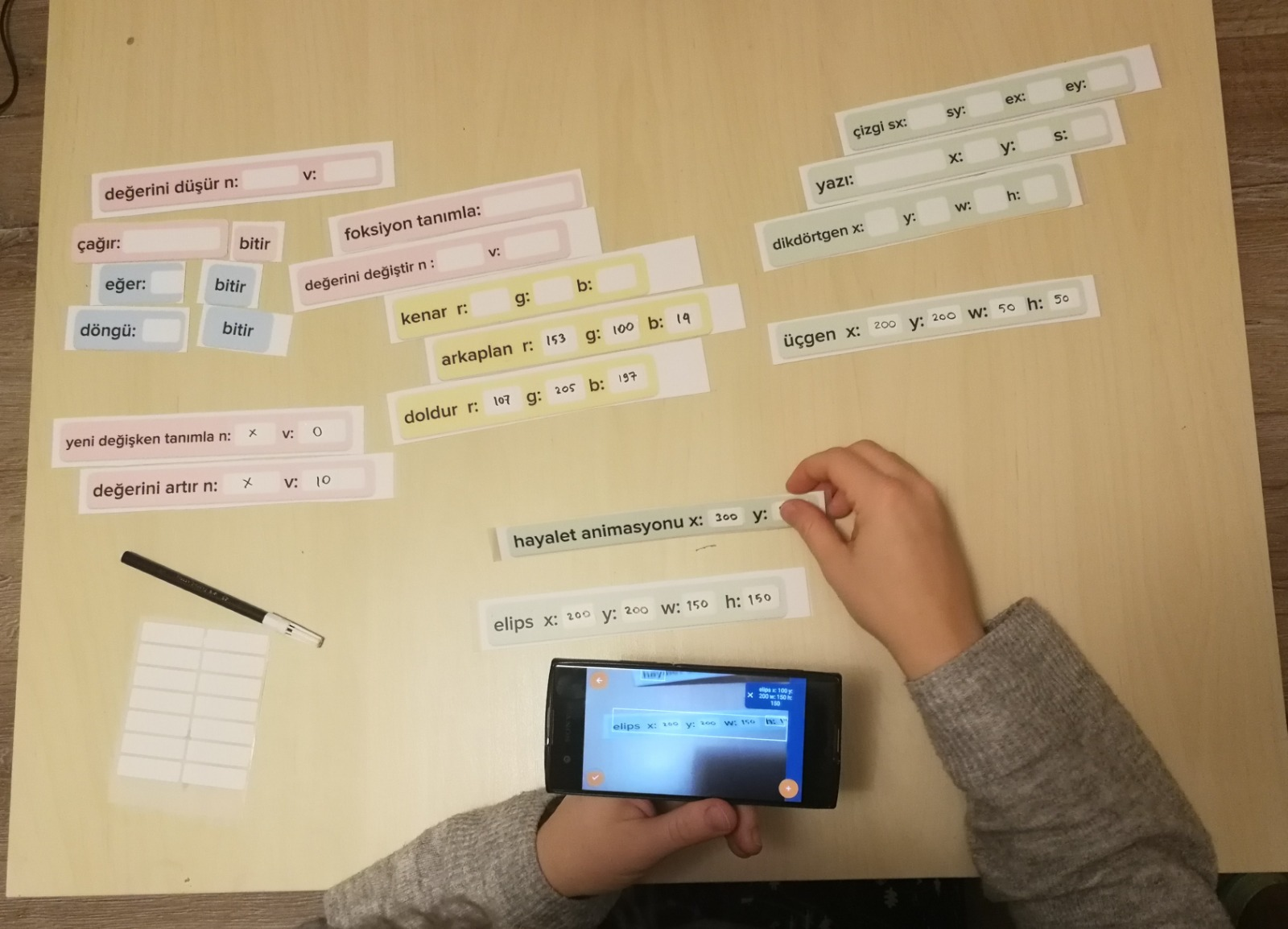

Kart-ON

Kart-ON, our TUBITAK-funded programming environment, is designed as an affordable means to increase collaboration among students and reduce their dependency on screen-based interfaces. Kart-ON is a tangible programming language that uses everyday objects such as paper, pens, and fabric as programming objects and employs a mobile phone as the compiler.

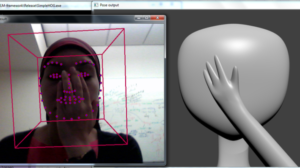

Generation of 3D Human Models and Animations Using Simple Sketches

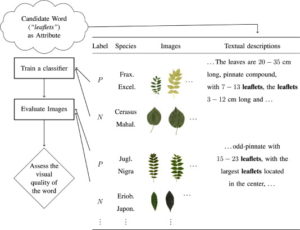

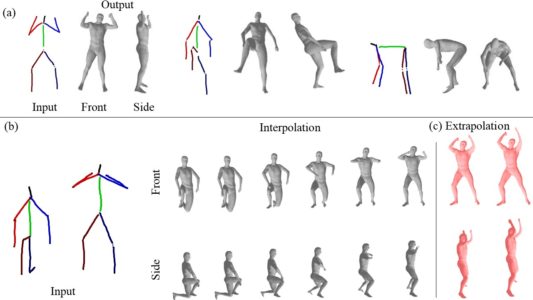

We use Variational Autoencoders to develop a novel framework that maps a simple 2D stick-figure sketch to a corresponding 3D human model. Our network learns the mapping between the input sketch and the output 3D model.

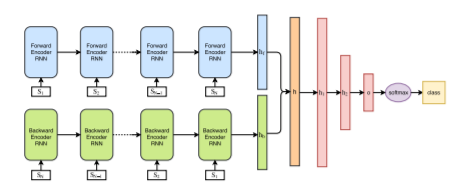

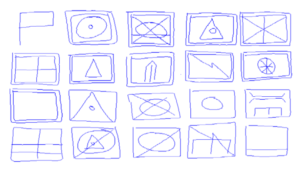

Deep Stroke-based Sketched Symbol Reconstruction and Segmentation

We propose a neural network model that segments symbols into stroke-level components. Our segmentation framework has two main elements: a fixed feature extractor and a Multilayer Perceptron (MLP) network that identifies a component based on the feature

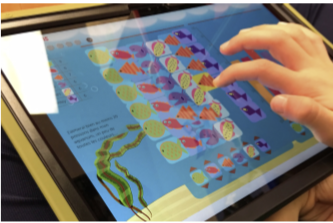

The ASC-Inclusion Perceptual Serious Gaming Platform for Autistic Children

Often, individuals with an Autism Spectrum Condition (ASC) have difficulties in interpreting verbal and non-verbal communication cues during social interactions. We develop a platform for children who have an ASC to learn emotion expression and recognition, through play in the virtual world.

Generation of 3D Human Models and Animations Using Simple Sketches

We demonstrate that our network can generate not only 3D models, but also 3D animations through interpolation and extrapolation in the learned embedding space. Extensive experiments show that our model learns to generate reasonable 3D models and animations.
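As a rough illustration of interpolation and extrapolation in an embedding space, the sketch below walks along the straight line through two latent codes. It assumes plain linear blending and a hypothetical helper name, `interpolate_latents`. The project's actual network and decoder are not shown here, so each returned row would still need to be passed through a trained decoder to obtain a 3D pose.

```python
import numpy as np


def interpolate_latents(z_start, z_end, num_frames, t_max=1.0):
    """Return latent codes on the line through z_start and z_end.

    Hypothetical helper for illustration only. Values of t in [0, 1]
    interpolate between the two codes; t_max > 1 extrapolates past z_end.
    """
    z_start = np.asarray(z_start, dtype=float)
    z_end = np.asarray(z_end, dtype=float)
    ts = np.linspace(0.0, t_max, num_frames)
    # Each row is one animation frame in latent space.
    return np.stack([(1.0 - t) * z_start + t * z_end for t in ts])


# Three frames between two made-up 2D latent codes.
frames = interpolate_latents([0.0, 0.0], [2.0, 4.0], num_frames=3)
```

Setting `t_max` above 1 continues the motion beyond the second key pose, which is one simple way to read "extrapolation" in this setting.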

Gaze-based Predictive User Interfaces: Visualizing user intentions in the presence of uncertainty

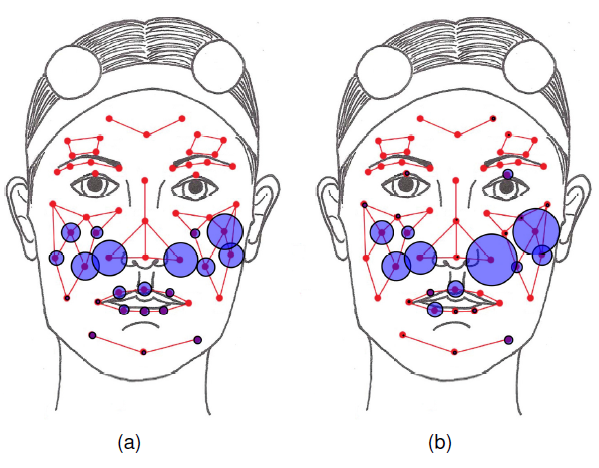

Gaze-based prediction systems are generally evaluated in terms of prediction accuracy, and on previously collected offline interaction data. Little attention has been paid to creating real-time interactive systems using eye gaze and evaluating them in online use. We make five main contributions that address this gap from a variety of aspects.

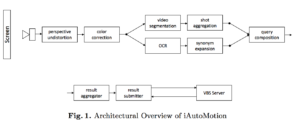

IMOTION – Searching for Video Sequences Using Multi-Shot Sketch Queries

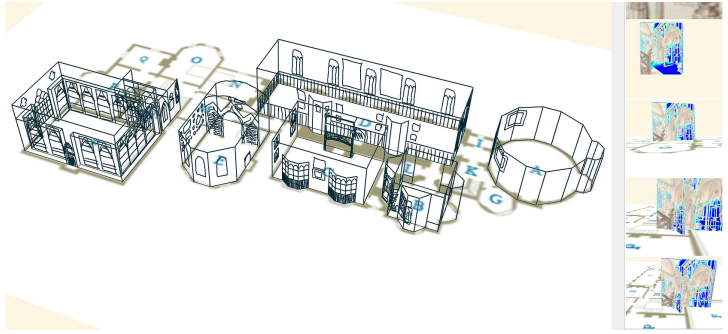

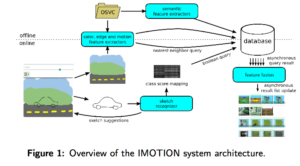

This paper presents the second version of the IMOTION system, a sketch-based video retrieval engine supporting multiple query paradigms. For the second version, the functionality and the usability of the system have been improved.

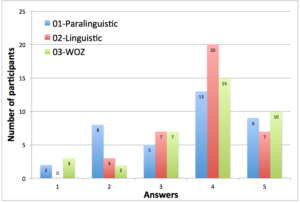

Multimodal Data Collection of Human-Robot Humorous Interactions in the JOKER Project

This paper presents a data collection of social interaction dialogs involving humor between a human participant and a robot. In this work, interaction scenarios have been designed in order to study social markers such as laughter.

Recent Developments and Results of ASC-Inclusion: An Integrated Internet-Based Environment for Social Inclusion of Children with Autism Spectrum Conditions

Individuals with Autism Spectrum Conditions (ASC) have marked difficulties using verbal and non-verbal communication for social interaction. The ASC-Inclusion project helps children with ASC by allowing them to learn how emotions can be expressed and recognised via playing games in a virtual world.

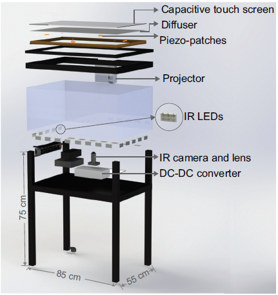

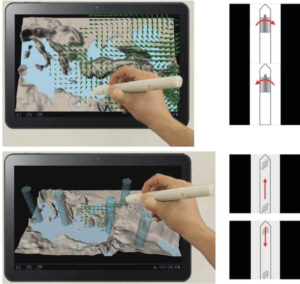

HaptiStylus: A Novel Stylus Capable of Displaying Movement and Rotational Torque Effects

We describe a novel stylus capable of displaying certain vibrotactile and inertial haptic effects to the user. Experimental results from our interactive pen-based game show that our haptic stylus is effective in practical settings.

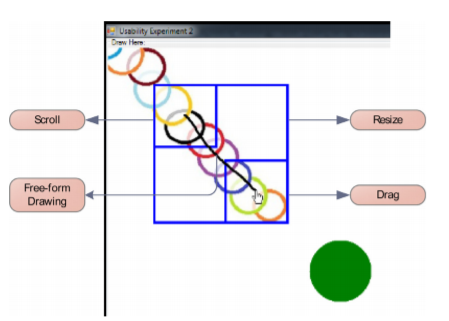

Real-Time Activity Prediction: A Gaze-Based Approach for Early Recognition of Pen-Based Interaction Tasks

In this paper (1) an existing activity prediction system for pen-based devices is modified for real-time activity prediction and (2) an alternative time-based activity prediction system is introduced. Both systems use eye gaze movements that naturally accompany pen-based user interaction for activity classification.

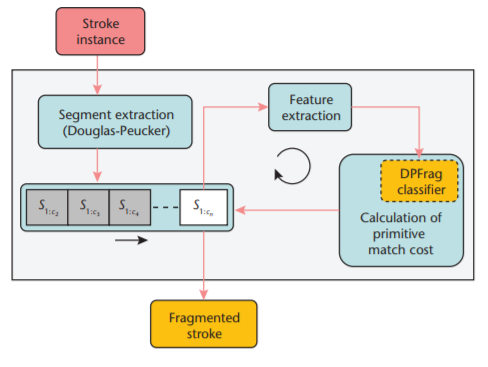

DPFrag: Trainable Stroke Fragmentation Based on Dynamic Programming

DPFrag is an efficient, globally optimal fragmentation method that learns segmentation parameters from data and produces fragmentations by combining primitive recognizers in a dynamic-programming framework. The fragmentation is fast and doesn’t require laborious and tedious parameter tuning. In experiments, it beat state-of-the-art methods on standard databases with only a handful of labeled examples.
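To make the dynamic-programming idea concrete, here is a minimal sketch of globally optimal stroke splitting. It is not DPFrag itself. The cost function `line_fit_cost` (squared distance from the chord between segment endpoints) and the hand-set `segment_penalty` are assumptions that stand in for the learned parameters and primitive recognizers described above.

```python
import numpy as np


def line_fit_cost(points, i, j):
    """Hypothetical primitive scorer: squared distance of points[i..j]
    from the straight chord joining the two endpoints."""
    seg = points[i:j + 1]
    a, b = seg[0], seg[-1]
    d = b - a
    length = np.hypot(d[0], d[1])
    if length == 0.0:
        return float(np.sum((seg - a) ** 2))
    # Perpendicular distance to the chord via the 2D cross product.
    cross = d[0] * (seg[:, 1] - a[1]) - d[1] * (seg[:, 0] - a[0])
    return float(np.sum((cross / length) ** 2))


def fragment(points, segment_penalty=1.0, cost=line_fit_cost):
    """Return the indices of the cut points that minimize total cost.

    best[j] is the cheapest way to split points[0..j] into segments;
    every segment pays its fit cost plus a fixed penalty (an assumed
    stand-in for parameters that would be learned from data).
    """
    points = np.asarray(points, dtype=float)
    n = len(points)
    best = [float("inf")] * n
    prev = [0] * n
    best[0] = 0.0
    for j in range(1, n):
        for i in range(j):
            candidate = best[i] + cost(points, i, j) + segment_penalty
            if candidate < best[j]:
                best[j] = candidate
                prev[j] = i
    # Walk the back-pointers from the last point to the first.
    cuts = [n - 1]
    while cuts[-1] != 0:
        cuts.append(prev[cuts[-1]])
    return cuts[::-1]


# An L-shaped stroke: the corner at index 2 should become a cut point.
corner_stroke = [(0, 0), (1, 0), (2, 0), (2, 1), (2, 2)]
cuts = fragment(corner_stroke)
```

Because every prefix is solved exactly once and reused, the search covers all possible splits in quadratic time in the number of points, which is what makes a globally optimal result practical.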

Physics Based Game & Level Editor with Sketch Recognition

Pen-based user interfaces facilitate natural and efficient human-computer interaction. In this project, we have combined the familiarity of pen and paper with the computing power of a tablet computer to build an engaging physics-based computer puzzle. This video demonstrates our pen-based level editor and the interaction paradigm within which users solve physics-based puzzles.