We study how computers can learn, understand, and use human language. Key challenges include grounding, ambiguity, and the many levels of analysis that language involves:

Grounding

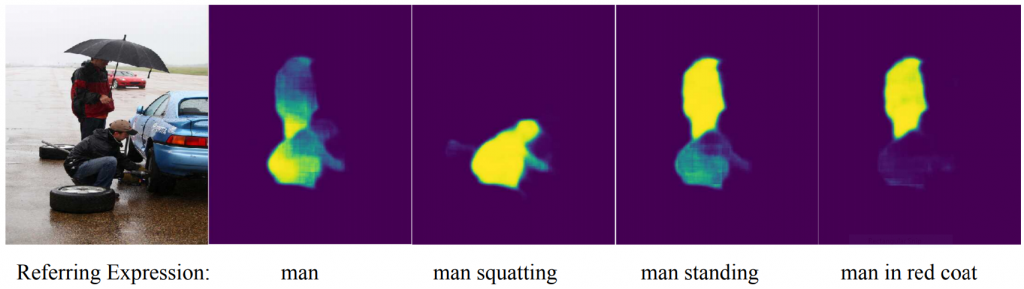

Many successful NLP applications (Siri, Google Translate) work by pattern matching in natural language text. They do not understand words like “red” or “in front of” the way humans do, that is, grounded in sensory-motor experience. We study multi-modal problems connecting language with vision and robotics to reach the next level of understanding. For example, our BiLingUNet model learns to point out objects in a photograph when they are described using natural language phrases.

Ambiguity

Language is inherently ambiguous: we say very little of what we mean and rely on our shared human context to communicate most of the meaning. For example, our Morse morphology model addresses ambiguity in Turkish words and finds the right interpretation for a word like “masalı” in different contexts: “babamın masalı” (my father's tale), “bana masalı anlat” (tell me the tale), “mavi masalı oda” (the room with the blue table). Humans are very good at disambiguating, and often we do not even realize that there is an ambiguity. How many different interpretations of the sentence “I saw the man on the hill with a telescope.” can you think of?
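To make the telescope example concrete, here is a minimal sketch that counts the syntactic readings of a sentence with a CKY-style chart. The grammar and lexicon below are toy assumptions written for this illustration, not one of our group's models, and the count only reflects where the prepositional phrases can attach under that grammar. It ignores lexical ambiguity, such as “saw” meaning “cut with a saw”.

```python
from collections import defaultdict

# Toy grammar in Chomsky normal form (hypothetical, for illustration only).
# Each rule is (parent, left_child, right_child).
BINARY_RULES = [
    ("S", "NP", "VP"),
    ("VP", "V", "NP"),
    ("VP", "VP", "PP"),   # a PP may modify the verb phrase
    ("NP", "NP", "PP"),   # or a PP may modify a noun phrase
    ("NP", "Det", "N"),
    ("PP", "P", "NP"),
]

# Toy lexicon mapping lowercase words to part-of-speech tags (also assumed).
LEXICON = {
    "i": ["NP"], "saw": ["V"], "the": ["Det"], "a": ["Det"],
    "man": ["N"], "hill": ["N"], "telescope": ["N"],
    "on": ["P"], "with": ["P"],
}


def count_parses(sentence, start="S"):
    """Count the distinct parse trees of `sentence` under the toy grammar."""
    words = sentence.lower().rstrip(".").split()
    n = len(words)
    # chart[i][j][X] = number of trees rooted in X that span words[i:j]
    chart = [[defaultdict(int) for _ in range(n + 1)] for _ in range(n + 1)]
    for i, word in enumerate(words):
        for tag in LEXICON.get(word, []):
            chart[i][i + 1][tag] += 1
    for width in range(2, n + 1):
        for i in range(n - width + 1):
            j = i + width
            for k in range(i + 1, j):  # split point between the two children
                for parent, left, right in BINARY_RULES:
                    left_count = chart[i][k].get(left, 0)
                    right_count = chart[k][j].get(right, 0)
                    chart[i][j][parent] += left_count * right_count
    return chart[0][n].get(start, 0)


if __name__ == "__main__":
    for text in ("I saw the man.",
                 "I saw the man with a telescope.",
                 "I saw the man on the hill with a telescope."):
        print(count_parses(text), text)
```

Under these assumptions the number of readings grows quickly with each added prepositional phrase, which is one reason attachment decisions usually need context or world knowledge rather than grammar alone.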

Many Levels of Analysis

Understanding language involves analysis at many different levels, such as phonology, morphology, syntax, semantics, and pragmatics. In 1976 John McCarthy, one of the founders of artificial intelligence, wrote a memo discussing the problem of getting a computer to understand a story from the New York Times:

“A 61-year old furniture salesman, John J. Hug, was pushed down the shaft of a freight elevator yesterday in his downtown Brooklyn store by two robbers while a third attempted to crush him with the elevator car because they were dissatisfied with the $1,200 they had forced him to give them.”

Here are some simple questions anybody who understands this story should be able to answer:

Who was in the store when the events began?

Who had the money at the end?

Did Mr. Hug know he was going to be robbed?

Does he know now that he was robbed?

—

When and where was Mr. Hug shoved?

Who forced whom to give $1,200 to whom?

Did the money satisfy the robbers?

The first four questions do not have obvious answers in the text and rely on common sense. Common sense reasoning is still beyond the state of the art in AI and we think that more work on language grounding, described above, is a prerequisite for this level of understanding.

The last three questions are answered directly in the text. Even to answer these simpler questions, we need to address linguistic problems like word sense disambiguation (“push” has 15 senses in WordNet, only some of which match “shove”), named entity recognition (Mr. Hug = John J. Hug), anaphora resolution (him = John J. Hug), parsing (who did what to whom?), and semantic relation identification (dissatisfied with $1,200 = $1,200 did not satisfy). Our group continues to work actively on many of these problems.