Data Selection for Pre-training and Instruction-tuning of LLMs

There is increasing evidence that choosing the right training data is essential for producing state-of-the-art large language models (LLMs). How can we decide on high-quality training data? Can we possibly select fewer data examples to improve performance and efficiency? In this talk, I will present two recent works on selecting high-quality data in pre-training and instruction tuning. I will first present QuRating, a simple framework for selecting pre-training data that captures the abstract attributes of texts humans intuitively perceive. We demonstrate that using state-of-the-art LLMs (e.g., GPT-3.5-turbo) can discern these qualities in pairwise judgments and emphasize the importance of balancing quality and diversity. We have created QuRatedPajama, a dataset comprising 260 billion tokens with fine-grained quality ratings, and show that sampling according to these ratings improves perplexity and in-context learning. Second, I present LESS, a method that effectively estimates data influences for identifying relevant instruction-tuning data for specific applications (a setting we call “targeted instruction tuning”). LESS is efficient, transferrable (we can use a smaller model for data selection), optimizer-aware (working with Adam), and easy to interpret. We show that training on a LESS-selected 5% of the data can often outperform training on full datasets on diverse downstream tasks.

On Some Statistical and Machine Learning Problems in Research and Engineering

This talk will present a family of statistical (mixture of experts) distributions and ground their approximation and estimation properties related to their capabilities to deal with heterogenous data in high-dimensional and distributed/federated settings. It will also introduce approaches to leverage modern machine (deep) learning approaches with scientific and semantic knowledge to solve physical problems in engineering, including industrial design and supervision.

Feature Learning in Two-layer Neural Networks: The Effect of Data Covariance

We study the effect of gradient-based optimization on feature learning in two-layer neural networks. We consider a setting where the number of samples is of the same order as the input dimension and show that, when the input data is isotropic, gradient descent always improves upon the initial random features model in terms of prediction risk, for a certain class of targets. Further leveraging the practical observation that data often contains additional structure, i.e., the input covariance has non-trivial alignment with the target, we prove that the class of learnable targets can be significantly extended, demonstrating a clear separation between kernel methods and two-layer neural networks in this regime.

Machine Learning for Autonomously Learning Robots

I am driven by the question of how robots can autonomously develop skills to become versatile helpers for humans. Considering children, it seems natural that they have their own agenda. They playfully explore their environment, without the necessity for somebody to tell them exactly what to do next. Replicating such flexible learning in machines is highly challenging. I will present my research on different machine learning methods as steps towards solving this challenge. Part of my research is concerned with artificial intrinsic motivations — their mathematical formulation and embedding into learning systems. Equally important is to learn the right representations and internal models and I will show how powerful intrinsic motivations can be derived from learned models. With model-based reinforcement learning and planning methods, I show how we can achieve active exploration and playful robots but also safety aware behavior. A really fascinating feature is that these learning-by-playing systems are able to perform well in unseen tasks zero-shot.

Growing Adaptive and Self-Assembling Machines

Despite all their recent advances, current AI methods are often still brittle and fail when confronted with unexpected situations. By incorporating collective intelligence ideas, we have recently been able to create neural networks that self-organize their weights for fast adaptation, machines that can recognize their own shape, and machines that self-assemble through local interactions alone. Additionally, in this talk I will present initial results from my GROW-AI ERC project, where we are developing neural networks that grow through a developmental process that mirrors key properties of embryonic development in biological organisms. The talk concludes with future research opportunities and challenges that we need to address to best capitalize on the same ideas that allowed biological intelligence to strive.

Aya: An Open Science Initiative to Accelerate Multilingual AI Progress

Access to cutting-edge breakthroughs in large language models (LLMs) has been limited to speakers of only a few, primarily English, languages. The Aya project aimed to change that by focusing on accelerating multilingual AI through an open-source initiative. This initiative resulted in a state-of-the-art multilingual instruction-tuned model and the largest multilingual instruction collection. Built by 3,000 independent researchers across 119 countries, the Aya collection is the largest of its kind, crafted through templating and translating existing NLP datasets across 114 languages. As part of this collection, the Aya dataset is the largest collection of original annotations from native speakers worldwide, covering 65 languages. Finally, trained on a diverse set of instruction mixtures, including the Aya collection and dataset, the Aya model is a multilingual language model that can follow instructions in 101 languages, achieving state-of-the-art performance in various multilingual benchmarks.

Factuality Challenges in the Era of Large Language Models

We will discuss the risks, the challenges, and the opportunities that Large Language Models (LLMs) bring regarding factuality. We will then delve into our recent work on using LLMs to assist fact-checking (e.g., claim normalization, stance detection, question-guided fact-checking, program-guided reasoning, and synthetic data generation for fake news and propaganda identification), on checking and correcting the output of LLMs, on detecting machine-generated text (blackbox and whitebox), and on fighting the ongoing misinformation pollution with LLMs. Finally, we will discuss work on safeguarding LLMs, and the safety mechanisms we incorporated in Jais-chat, the world’s best open Arabic-centric foundation and instruction-tuned LLM.

Real-Time Digital Twin System in 6G Era

With the tremendous advances in the upcoming 6G era, the world enters an age of connected intelligence. This will enable real-time Digital Twin deployments with specific integrated features such as seamless automation and control, augmented reality/virtual reality, visualization, and more connected devices per square kilometer. This new knowledge-based era of the 6G vision needs seamless control systems, fully automated management, artificial-intelligence enabled communication, well-bred computing methodologies, self-organizing behaviors, and high-end connectivity. Here, the importance of Digital Twins (DT) based systems has become vital. Digital Twin (DT) is the virtual representation of a Cyber-Physical System’s network elements and dynamics. The use of DT provides undue advantages such as resiliency, sustainability, real-time monitoring, control-tower-based management, thorough what-if analyses, an extremely high-performance simulation model for research, testing, and optimization. With these in mind, in this talk, first, a short recap of the ai-enabled digital twin concept and its potential market size in Industry 4.0 will be introduced. The technology behind DT, such as the high precision virtual network modeling and edge intelligence for ultra-low latency, will then be described. The reliability, latency, capacity, and connectivity issues in DT will be discussed. Moreover, several application areas of DT will also be underlined in terms of demand forecasting, warehouse automation, predictive maintenance, anomaly detection, risk assessment, intelligent scheduling, and control tower. Some important implementation areas of DT such as Supply Chain Management, Smart Manufacturing, Sustainable Product Line Management, Healthcare, and Smart Cities will also be covered.

Machine Learning for Life Sciences

In this talk, I will give examples of methods developed in our group at the intersection of machine learning and computational biology. The main body of the talk will focus on our drug synergy prediction efforts. Combination drug therapies are effective treatments for cancer. However, the genetic heterogeneity of the patients and exponentially large space of drug pairings pose significant challenges for finding the right combination for a specific patient. Current in silico prediction methods promise to reduce the vast number of candidate drug combinations for further screening. However, existing powerful methods are trained with cancer cell line gene expression data, which limits their applicability in clinical settings. While synergy measurements on cell lines models are available at large scale, patient-derived samples are too few to train a complex model. On the other hand, patient-specific single-drug response data are relatively more available. In this talk, I will first present our method trained on cell line gene expression data and further describe training strategies for customizing patient drug synergy predictions using single drug response data.

A Light Touch Approach to Teaching Transformers Multi-view Geometry

Transformers are powerful visual learners, in large part due to their conspicuous lack of manually-specified priors. This flexibility can be problematic in tasks that involve multiple-view geometry, due to the near-infinite possible variations in 3D shapes and viewpoints (requiring flexibility), and the precise nature of projective geometry (obeying rigid laws). To resolve this conundrum, we propose a “light touch” approach, guiding visual Transformers to learn multiple-view geometry but allowing them to break free when needed. We achieve this by using epipolar lines to guide the Transformer’s cross-attention maps, penalizing attention values outside the epipolar lines and encouraging higher attention along these lines since they contain geometrically plausible matches. Unlike previous methods, our proposal does not require any camera pose information at test-time. We focus on pose-invariant object instance retrieval, where standard Transformer networks struggle, due to the large differences in viewpoint between query and retrieved images. Experimentally, our method outperforms state-of-the-art approaches at object retrieval, without needing pose information at test-time.

Affective Social Robots and Interaction Studies for Children with Disabilities

I will be summarizing our projects on the affective social humanoid robots for the education and health applications of children with disabilities. We develop affective modules to recognize the behaviours, attention, emotion and stress of children based on the data (physiological, facial, audio, an gaze) collected during their interaction with the robots and the sensory setup. We also work on the affect based serious games and exergames for children.

Topological Deep Learning: A New Hope for AI4Science

Topological deep learning is a rapidly growing field that pertains to the development of deep learning models for data supported on topological domains such as simplicial complexes, cell complexes, and hypergraphs, which generalize many domains encountered in scientific computations. In this talk, Tolga will present a unifying deep learning framework built upon an even richer data structure that includes widely adopted topological domains. Specifically, he will begin by introducing combinatorial complexes, a novel type of topological domain. Combinatorial complexes can be seen as generalizations of graphs that maintain certain desirable properties. Similar to hypergraphs, combinatorial complexes impose no constraints on the set of relations. In addition, combinatorial complexes permit the construction of hierarchical higher-order relations, analogous to those found in simplicial and cell complexes. Thus, combinatorial complexes generalize and combine useful traits of both hypergraphs and cell complexes, which have emerged as two promising abstractions that facilitate the generalization of graph neural networks to topological spaces. Second, building upon combinatorial complexes and their rich combinatorial and algebraic structure, Tolga will develop a general class of message-passing combinatorial complex neural networks (CCNNs), focusing primarily on attention-based CCNNs. He will additionally characterize permutation and orientation equivariances of CCNNs, and discuss pooling and unpooling operations within CCNNs. The performance of CCNNs on tasks related to mesh shape analysis and graph learning will be provided. The experiments demonstrate that CCNNs have competitive performance as compared to state-of-the-art deep learning models specifically tailored to the same tasks. These findings demonstrate the advantages of incorporating higher-order relations into deep learning models and shows great promise for AI4Science.

The rapidly changing technological landscape makes it difficult for people to understand the impacts of these advances and manage their boundaries. One such aspect is protecting user privacy in online social media platforms. People share a wide variety of information on social media, including personal and sensitive information, without understanding the size of their audience which may cause privacy complications. The networked nature of the platforms further exacerbates these complications where the information can be shared without the information owner’s control. People struggle to achieve their intended audience using the privacy settings provided by the platforms. Researching user behaviours in these situations is essential to understanding and helping people protect their privacy. Another domain where technological advances trouble people is the increasing use of artificial intelligence decisions in potentially harmful situations. The automated systems’ reasoning processes still often remain unclear to people interacting with such systems, which may also harm people by making unjust decisions. There are no efficient means for people to challenge automated decisions and obtain proper restitution if necessary. It is imperative that people are given tools to understand and contest these automated decisions easily.

Chains, Trees, and Graphs of Thoughts: Demystifying Structured-Enhanced Prompting

The field of natural language processing has witnessed significant progress in recent years, with a notable focus on improving language models’ performance through innovative prompting techniques. Among these, structure-enhanced prompting has emerged as a promising paradigm, with designs such as Chain-of-Thought (CoT) or Tree of Thoughts (ToT), in which the LLM reasoning is guided by a structure such as a tree. In the first part of the talk, we overview this recent field, focusing on fundamental classes of harnessed structures, the representations of these structures, algorithms executed with these structures, relationships to other parts of the generative AI pipeline such as knowledge bases, and others. Second, we introduce Graph of Thoughts (GoT): a framework that advances prompting capabilities in LLMs beyond those offered by CoT or ToT. The key idea and primary advantage of GoT is the ability to model the information generated by an LLM as an arbitrary graph, where units of information (“LLM thoughts”) are vertices, and edges correspond to dependencies between these vertices. This approach enables combining arbitrary LLM thoughts into synergistic outcomes, distilling the essence of whole networks of thoughts, or enhancing thoughts using feedback loops. We illustrate that GoT offers advantages over state of the art on different tasks such as keyword counting while simultaneously reducing costs. We finalize with outlining research challenges in this fast-growing field.

Modeling 3D Shape and Motion from Video

Computer vision and machine learning methods are getting really good at 3D reconstruction from 2D images. What they are not as good at is understanding, reconstructing, and generating scenes that are in motion. I’ll talk about recent work on new methods and scene representations that reconstruct and generate scenes that unfold over time.

Interactive Robot Perception and Learning for Mobile Manipulation

The long-standing ambition for autonomous, intelligent service robots that are seamlessly integrated into our everyday environments is yet to become a reality. Humans develop comprehension of their embodiments by interpreting their actions within the world and acting reciprocally to perceive it —- the environment affects our actions, and our actions simultaneously affect our environment. Besides great advances in robotics and Artificial Intelligence (AI), e.g., through better hardware designs or algorithms incorporating advances in Deep Learning in robotics, we are still far from achieving robotic embodied intelligence. The challenge of attaining artificial embodied intelligence — intelligence that originates and evolves through an agent’s sensorimotor interaction with its environment — is a topic of substantial scientific investigation and is still an open challenge. In this talk, I will walk you through our recent research works for endowing robots with spatial intelligence through perception and interaction to coordinate and acquire skills that are necessary for their promising real-world applications. In particular, we will see how we can use robotic priors for learning to coordinate mobile manipulation robots, how neural representations can allow for learning policies and safe interactions, and, at the crux, how we can leverage those representations to allow the robot to understand and interact with a scene, or guide it to acquire more “information” while acting in a task-oriented manner.

Beyond Test Accuracies for Studying Deep Neural Networks

Already in 2015, Leon Bottou discussed the prevalence and end of the training/test experimental paradigm in machine learning. The machine learning community has however continued to stick to this paradigm until now (2023), relying almost entirely and exclusively on the test-set accuracy, which is a rough proxy to the true quality of a machine learning system we want to measure. There are however many aspects in building a machine learning system that require more attention. Specifically, I will discuss three such aspects in this talk; (1) model assumption and construction, (2) optimization and (3) inference. For model assumption and construction, I will discuss our recent work on generative multitask learning and incidental correlation in multimodal learning. For optimization, I will talk about how we can systematically study and investigate learning trajectories. Finally for inference, I will lay out two consistencies that must be satisfied by a large-scale language model and demonstrate that most of the language models do not fully satisfy such consistencies.

Finite-Width Neural Networks: A Landscape Complexity Analysis

In this talk, I will present an average-case analysis of finite-width neural networks through permutation symmetry. First, I will give a new scaling law for the critical manifolds of finite-width neural networks derived from counting all partitions due to neuron splitting from an initial set of neurons. Considering the invariance of zero neuron addition, we derive the scaling law of the zero-loss manifolds that is exact for the population loss. In a simplified setting, a factor 2log2 of overparameterization guarantees that the zero-loss manifolds are the most numerous. Our complexity calculations show that the loss landscape of neural networks exhibits extreme non-convexity at the onset of overparameterization, which is tamed gradually with overparameterization, and it effectively vanishes for infinitely wide networks. Finally, based on the theory, we will propose an `Expand-Cluster’ algorithm for model identification in practice.

Images, Language, and Actions: The Three Ingredients of Robot Learning

Most of the major recent breakthroughs in AI have relied on training huge neural networks on huge amounts of data. But what about a breakthrough in real-world robotics? One of the challenges is that physical robotics data is very scarce, and very expensive to collect. To address this, my team and I have been developing very data-efficient methods for robots to learn new tasks through human demonstrations. Using these methods, we are now able to quickly teach robots a range of everyday tasks, such as hammering in a nail, inserting a plug into a socket, and scooping up an object with a spatula. However, even with these efficient methods, providing human demonstrations can be laborious. Therefore, we have also been exploring the use of off-the-shelf neural networks trained on web-scale data, such as OpenAI’s DALL-E and GPT, to act as a robot’s “imagination” or its “internal monologue” when solving new tasks. Through this talk, we will explore the importance of image, language, and action data in robotics, as the three ingredients for scalable robot learning.

Decoupling The Input Graph and The Computational Graph: The Most Important Unsolved Problem in Graph Representation Learning

When deploying graph neural networks, we often make a seemingly innocent assumption: that the input graph we are given is the ground-truth. However, as my talk will unpack, this is often not the case: even when the graphs are perfectly correct, they may be severely suboptimal for completing the task at hand. This will introduce us to a rich and vibrant area of graph rewiring, which is experiencing a renaissance in recent times. I will discuss some of the most representative works, including two of our own contributions (https://arxiv.org/abs/2210.02997, https://arxiv.org/abs/2306.03589), one of which won the Best Paper Award at the Graph Learning Frontiers Workshop at NeurIPS’22.

Knowledge Augmentation for Language Models

Modern language models have the capacity to store and use immense amounts of knowledge about real world. Yet, their knowledge about the world is often incorrect or outdated, motivating ways to augment their knowledge. In this talk, I will present two complementary avenues for knowledge augmentation: (1) a modular, retrieval-based approach which brings in new information at inference time and (2) a parameter updating approach which aims to enable models to internalize new information and make inferences based on it.

Efficient and Robust Learning on Non-Rigid Surfaces and Graphs

In this talk, I will describe several approaches for learning on curved surfaces undergoing non-rigid deformations. First, I will give a brief overview of intrinsic convolution methods, and then present a different approach based on learned diffusion. The key properties of this approach are its robustness to changes in discretization and efficiency in enabling long-range communication, both in terms of memory and time. I will then showcase several applications, ranging from RNA surface segmentation to non-rigid shape correspondence, and present a recent extension for learning on graphs.

AI Driven Molecular Simulations

Accurate simulations of molecules play an important role in material design, drug discovery and industrial chemical processing. In the field of condensed matter physics, molecular simulations enable us to understand critical phenomena. However simulations of molecules at a large scale using conventional differential equation integrators is limited by the long simulation times. For example, at current, we are off my about 2 orders of magnitude in time scale for protein simulations. In this seminar, we will discuss several techniques of using Deep Neural Networks to learn the dynamics of molecules and hence circumvent the need for integrating equations of motions. Deep Learning techniques can generally speed up simulations by 10x to 100x while maintaining accuracies.

“Sketching” into the future of AI

Humans sketch, from historic times in caves, to the time being by scribbling on phones and tablets. As Artificial Intelligence (AI) learns to see and perceive the world around us (aka computer vision), understanding how humans sketch plays an important and fundamental role in casting insights into the human visual system, and in turn informing AI model designs. This talk is all about sketches, summarising over a decade of research from the SketchX Research Lab at the University of Surrey. By the end, it hopes to convey how sketch research can inform the future of AI, both in terms of fundamental theory and applications that could revolutionise the status quo.

Language Models as World Models

The extent to which language modeling induces representations of the world outside text—and the broader question of whether it is possible to learn about meaning from text alone—have remained a subject of ongoing debate across NLP and cognitive sciences. I’ll present two studies from my lab showing that transformer language models encode structured and manipulable models of situations in their hidden representations. I’ll begin by presenting evidence from *semantic probing* indicating that LM representations of entity mentions encode information about entities’ dynamic state, and that these state representations are causally implicated downstream language generation. Despite this, even today’s largest LMs are prone to glaring semantic errors: they hallucinate facts, contradict input text, or even their own previous outputs. Building on our understanding of how LMs build models of entities and events, I’ll present a *representation editing* model called REMEDI that can correct these errors directly in an LM’s representation space, in some cases making it possible to generate output that cannot be produced with a corresponding textual prompt, and to detect incorrect or incoherent output before it is generated.

The Difference between Language and Thought

Today’s large language models (LLMs) routinely generate coherent, grammatical and seemingly meaningful paragraphs of text. This achievement has led to speculation that these models are—or will soon become—“thinking machines”, capable of performing tasks that require abstract knowledge and reasoning. In this talk, I will argue that, when evaluating LLMs, we should distinguish between their formal linguistic competence—knowledge of linguistic rules and patterns—and functional linguistic competence—understanding and using language in the world. This distinction stems from modern neuroscience research, which shows that these skills recruit different mechanisms in the human brain. I will show that, although LLMs are close to mastering formal linguistic competence, they still fail at many functional competence tasks, which require drawing on various non-linguistic cognitive skills. Finally, I will discuss why we humans are so tempted to mistake fluent speech for fluent thought.

Machine Learning for Weather and Climate Prediction

This talk will provide an overview on the state-of-the-art in machine learning in Earth system science. It will outline how conventional weather and climate models and machine learned models will co-exist in the future, and the challenges that need to be addressed when building the best machine learning forecast systems.t behavior in air combat.

Reinforcement Learning for Solving High Complexity Decision-Making Problems

Reinforcement learning (RL) has attracted significant interest in both academia and industry in recent years. The main premise of RL is the ability to control a system efficiently, without requiring any prior knowledge of the dynamics of the system. That being said, using RL as an out of the box approach only works for relatively simple problems with well-defined episodic structures, small number of actions and dense reward signals. On the other hand, many real-world problems possess extremely delayed reward signals, gigantic action spaces and non-episodic dynamics. In this talk, we will show that such high complexity decision making problems can be solved by wrapping RL algorithms with other powerful machine learning techniques, such as curriculum learning, hierarchical decompositions and imitation learning. We will demonstrate the potential of these methods across three different use cases; i) autonomous driving in urban environments, ii) playing real-time strategy games and iii) cloning fighter pilot behavior in air combat.

InterText: Modelling Text as a Living Object in Cross-Document Context

Digital texts are cheap to produce, fast to update, easy to interlink, and there are a lot of them. The ability to aggregate and critically assess information from connected, evolving texts is at the core of most intellectual work – from education to business and policy-making. Yet, humans are not very good at handling large amounts of text. And while modern language models do a good job at finding documents, extracting information from them and generating natural-sounding language, the progress in helping humans read, connect, and make sense of interrelated texts has been very much limited.Funded by the European Research Council, the InterText project brings natural language processing (NLP) forward by developing a general framework for modelling and analysing fine-grained relationships between texts – intertextual relationships. This crucial milestone for AI would allow tracing the origin and evolution of texts and ideas and enable a new generation of AI applications for text work and critical reading. Using scientific peer review as a prototypical model of collaborative knowledge construction anchored in text, this talk will present the foundations of our intertextual approach to NLP, from data modelling and representation learning to task design, practical applications and intricacies of data collection. We will discuss the limitations of the state of the art, report on our latest findings and outline the open challenges on the path towards general-purpose AI for fine-grained cross-document analysis of texts.

DeepSym: An (Almost) End-to-End Symbol Extraction and Rule Learning System for Long-Horizon Robotic Planning

Symbolic planning and reasoning are powerful tools for robots tackling complex tasks. However, the need to manually design the symbols restrict their applicability, especially for robots that are expected to act in open-ended environments. Therefore symbol formation and rule extraction should be considered part of robot learning, which, when done properly, will offer scalability, flexibility, and robustness. Towards this goal, we propose a novel general method that finds action-grounded, discrete object and effect categories and builds probabilistic rules over them for non-trivial action planning.

How to Avoid Pitfalls in Applied Data Science / Machine Learning Projects – Lessons Learnt the Hard Way

The path for a successful applied machine learning project is full of potholes. An ML practitioner will need to fall into these potholes and eventually gain their own experience, derive their own list of lessons-learnt. In this talk, I’ll share my experience in case it might help others avoid some of those pitfalls. Most failed ML projects fail because they attempt to solve a non-important, non-solvable or already solved problem. Taking the time, before writing the first line of code, and working with the stakeholders side-by-side to clarify the business problem, contratints, scope, roles and responsibilities is critical for the success of the project. I’ll talk about how a typical ML project team is structured and works together to deliver impact. During the execution of the project, there are certain best practices that will help the ML practitioner to avoid technical debts. In this talk, I try to define different types and sources of technical debts and some practical tips that could help avoiding or at least minimizing them.

Beyond Wikipedia: Wikimedia Movement and Sister Projects

As internet users, we all are using or being exposed to the content of Wikimedia projects in our daily lives. The content in Wikimedia projects is also useful as a dataset in advancing artificial intelligence research and application. In this talk we will be presenting Wikipedia and its sister projects from an editor-perspective, introduce the global movement behind those projects and give short information about the Lexicographical data project of Wikidata.

Self-Supervised Learning with an Information Maximization Criterion

Self-supervised learning allows AI systems to learn effective representations from large amounts of data using tasks that do not require costly labeling. Mode collapse, i.e., the model producing identical representations for all inputs, is a central problem to many self-supervised learning approaches, making self-supervised tasks, such as matching distorted variants of the inputs, ineffective. In this article, we argue that a straightforward application of information maximization among alternative latent representations of the same input naturally solves the collapse problem and achieves competitive empirical results. We propose a self-supervised learning method, CorInfoMax, that uses a second-order statistics-based mutual information measure that reflects the level of correlation among its arguments. Maximizing this correlative information measure between alternative representations of the same input serves two purposes: (1) it avoids the collapse problem by generating feature vectors with non-degenerate covariances; (2) it establishes relevance among alternative representations by increasing the linear dependence among them. An approximation of the proposed information maximization objective simplifies to a Euclidean distance-based objective function regularized by the log- determinant of the feature covariance matrix. The regularization term acts as a natural barrier against feature space degeneracy. Consequently, beyond avoiding complete output collapse to a single point, the proposed approach also prevents dimensional collapse by encouraging the spread of information across the whole feature space. Numerical experiments demonstrate that CorInfoMax achieves better or competitive performance results relative to the state-of-the-art SSL approaches.

Mining Genomic Resources for Antimicrobial Peptides

The silent pandemic due to superbugs – pathogens resistant to multiple antimicrobial drugs – kills 1.5 million people every year. Threat from superbugs will only grow if the current practice of wide antibiotics use continues, and if we do not develop new alternatives to replace the ineffective drugs on the market. To fight this trend, drug development efforts are increasingly focusing on members of a certain biomolecule family called antimicrobial peptides (AMPs). These biomolecules have evolved together with the bacteria in their environment, and are known not to induce resistance to the same extent the conventional antibiotics do.

AMPs are employed by all classes of life, and their sequences are encoded in the species’ genomes. There is a rich repertoire of genomics data waiting to be mined to discover AMPs. In this presentation, I will describe the sequencing, bioinformatics, and testing technologies required to discover and validate AMPs in high throughput. Special emphasis will be on de novo sequence assembly methods and machine learning models for sequence annotation.

The End of Words? Language Modelling with Pixels

Language models are defined over a finite set of inputs, which creates a bottleneck if we attempt to scale the number of languages supported by a model. Tackling this bottleneck usually results in a trade-off between what can be represented in the embedding matrix and computational issues in the output layer. I will present PIXEL, the Pixel-based Encoder of Language, which suffers from neither of these issues. PIXEL is a pretrained language model that renders text as images, making it possible to transfer representations across languages based on orthographic similarity or the co-activation of pixels. PIXEL is trained on predominantly English data in the Wikipedia and Bookcorpus datasets to reconstruct the pixels of masked patches instead of predicting a probability distribution over tokens. I will present the results of an 86M parameter model on downstream syntactic and semantic tasks in 32 typologically diverse languages across 14 scripts. PIXEL substantially outperforms BERT when the script is not seen in the pretraining data but it lags behind BERT when working with Latin scripts. I will finish by showing that PIXEL is robust to noisy text inputs, further confirming the benefits of modelling language with pixels.

NextG Wireless Systems at the Nexus of ML, Mobile XR, and UAV-IoT

The talk reflects the recent paradigm shift in wireless networks research from the traditional objective of enabling ever higher transmission rates at the physical layer to enabling for the network system higher resilience to attacks, higher robustness to system components’ failures, closer vertical integration with key emerging applications and their quality of experience needs, and intelligent self-coordination. The talk will comprise three stories of related recent research (the number three is good ). I will first talk about multi-connectivity enabled NextG wireless multi-user VR systems. Then, I will outline our advances in domain-aware fast RL for IoT systems. Third, I will talk about enabling real-time human AR streaming in NextG classrooms featuring real and virtual participants. The presentation of each of these studies will comprise a brief outline of the overall NSF project in which they are embedded. Next, I will highlight an interdisciplinary NIH R01 study I lead at the nexus of VR and AI aimed at addressing the societal need of low-vision rehabilitation. Finally, I will leave the floor open for questions and discussions.

What Learning Algorithm Is In-Context Learning? Investigations with Linear Models

Neural sequence models, especially transformers, exhibit a remarkable capacity for in-context learning. They can construct new predictors from sequences of labeled examples (x, f(x)) presented in the input without further parameter updates. We investigate the hypothesis that transformer-based in-context learners implement standard learning algorithms implicitly, by encoding smaller models in their activations, and updating these implicit models as new examples appear in the context. Using linear regression as a prototypical problem, we offer three sources of evidence for this hypothesis. First, we prove by construction that transformers can implement learning algorithms for linear models based on gradient descent and closed-form ridge regression. Second, we show that trained in-context learners closely match the predictors computed by gradient descent, ridge regression, and exact least-squares regression, transitioning between different predictors as transformer depth and dataset noise vary, and converging to Bayesian estimators for large widths and depths. Third, we present preliminary evidence that in-context learners share algorithmic features with these predictors: learners’ late layers non-linearly encode weight vectors and moment matrices. These results suggest that in-context learning is understandable in algorithmic terms, and that (at least in the linear case) learners may rediscover standard estimation algorithms. Code and reference implementations released at this http link.

The City Answers Your Questions: Sensory Intelligence at the Edge

This talk presents SensiX, a multi-tenant runtime for adaptive model execution with integrated MLOps on edge devices, e.g., a camera, a microphone, or IoT sensors. Through its highly modular componentisation to externalise data operations with clear abstractions and document-centric manifestation for system-wide orchestration, SensiX can serve multiple models efficiently with fine-grained control on edge devices while minimising data operation redundancy, managing data and device heterogeneity, reducing resource contention and removing manual MLOps.

A particular deployment of SensiX is an urban conversational agent. Lingo is a hyper-local conversational agent embedded deeply into the urban infrastructure that provides rich, purposeful, detailed, and in some cases, playful information relevant to a neighbourhood. Lingo provides hyper-local responses to user queries. The responses are computed by SensiX to act as an information source. These queries are served through a covert communication mechanism over Wi-Fi management frames to enable privacy-preserving proxemic interactions.

Affective Speech Modeling and Analysis: Robustness, Generalization, Usability

Speech technology has proliferated into our life, and speech emotion recognition (SER) modules add humaine aspect to the wide-spread use of speech based services. Deep learning techniques play a key role in realizing SER for into-life application. In this talk, we will talk briefly about three main components of using deep models for SER: robustness, generalization and usability, and share several of our recent developments in each of the three main components.

Multi-Accelerator Execution of Neural Network Inference on Diversely Heterogeneous SoCs

Computing systems are becoming more complex by integrating specialized processing units, i.e., accelerators, that are optimized to perform a specific type of operation. This demand is fueled by the need to run distinct workloads in mobile and autonomous platforms. Such systems often embed diversely heterogeneous System-on-Chips(SoC) where an operation can be executed by more than a single type of accelerator with varying performance, energy, and latency characteristics. A hybrid (i.e., multi-accelerator) execution of popular workloads, such as neural network (NN) inference, collaboratively and concurrently on different types of accelerators in a diversely heterogeneous SoC is a relatively new and unexplored scheme. Multi-accelerator execution has the potential to provide unique benefits for computing systems with limited resources. In this talk, we investigate a framework that enables resource-constraint aware multi-accelerator execution for diversely heterogeneous SoCs. We achieve this by distributing the layers of a NN inference across different accelerators so that the trade-off between performance and energy satisfies system constraints. We further explore improving total throughput by concurrently using different types of accelerators for executing NNs in parallel. Our proposed methodology uniquely considers inter-accelerator transition costs, shared-memory contention and accelerator architectures that embed internal hardware pipelines. We employ empirical performance models and constraint-based optimization problems to determine optimal multi-accelerator execution schedules.

Imbuing Collaborative Robotic Manipulators with Human-Robot Interaction Skills

Industrial robots, a shining example of the success of robotics in the manufacturing domain, are developed as manipulators without any support for human-robot interaction (HRI). However, a new generation of manipulators, called Collaborative robots (Cobots), designed with embedded safety features, are being deployed to operate alongside humans. These advances are pushing HRI research, most of which is being conducted on “toy robots that do not do much work,” towards deployment on Cobots. In our two TUBITAK projects, called CIRAK and KALFA, we study how Cobots can be imbued with HRI capabilities in a collaborative assembly task. Based on the observation that manipulation skills of Cobots being (and will remain in the near future) inferior to the skill of workers, we envision Cobots positioning themselves as unskilled coworkers (hence the name CIRAK and KALFA) in which they hand in proper tools and parts to the worker. Within this talk, I will summarize our work towards imbuing HRI skills on Cobots through the use of some animation principles through behaviors such as “breathing” and “gazing”, as well as automatic assembly learning. Finally, I will briefly share the developments about METU-ROMER.

Object Manipulation with Physics-Based Models

I will give an overview of our work on robotic object manipulation. First, I will talk about physics-based planning. This refers to robot motion planners that use predictions about the motion of contacted objects. We have particularly been interested in developing such planners for cluttered scenes, where multiple objects might simultaneously move as a result of robot contact. Second, I will talk about a more conventional grasping-based problem, where a robot must manipulate an object for the application of external forceful operations on it. Imagine a robot holding and moving a wooden board for a human, while the human drills holes into the board and cuts parts of it. I will describe our efforts in developing a planner that addresses the geometric, force stability, and human-comfort constraints for such a system.

Enhancing the Creative Process in Digital Prototyping

Despite advances in computer-aided design (CAD) systems and video editing software, digital content creation for design, storytelling, and interactive experiences remains a challenging problem. This talk introduces a series of studies, techniques, and systems along three thrusts that engage creators more directly and enhance the user experience in authoring digital content. First, we present a drawing dataset and spatiotemporal analysis that provide insight into how people draw by comparing tracing, freehand drawing, and computer-generated approximations. We found a high degree of similarity in stroke placement and types of strokes used over time, which informs methods for customized stroke treatment and emulating drawing processes. We also propose a deep learning-based technique for line drawing synthesis from animated 3D models, where our learned style space and optimization-based embedding enable the generation of line drawing animations while allowing interactive user control across frames. Second, we demonstrate the importance of utilizing spatial context in the creative process in augmented reality (AR) through two tablet-based interfaces. DistanciAR enables designers to create site-specific AR experiences for remote environments using LiDAR capture and new authoring modes, such as Dollhouse and Peek. PointShopAR integrates point cloud capture and editing in a single AR workflow to help users quickly prototype design ideas in their spatial context. Our user studies show that LiDAR capture and the point cloud representation in these systems can make rapid AR prototyping more accessible and versatile. Last, we introduce two procedural methods to generate time-based media for visual communication and storytelling. AniCode supports authoring and on-the-fly consumption of personalized animations in a network-free environment via a printed code. CHER-Ob generates video flythroughs for storytelling from annotated heterogeneous 2D and 3D data for cultural heritage. Our user studies show that these methods can benefit the video-oriented digital prototyping experience and facilitate the dissemination of creative and cultural ideas.

Facets of Efficiency in NLP

In recent years model sizes have increased substantially, and so did the cost for training them. This is problematic for two reasons: 1) it excludes organizations that do not have thousands of GPUs at hand for training such models, and 2) it becomes apparent that the hardware will not able to scale along with the growth of the models. Both can be alleviated by improving the efficiency of NLP models. This talk will first provide an overview of where efficiency may be improved within a typical NLP pipeline. We will then have a closer look at methods that improve data efficiency. Finally, we will discuss how we can quantify efficiency using different kinds of metrics.

Computational Imaging: Integrating Physical and Learned Models using Plug-and-Play Methods

Computational imaging is a rapidly growing area that seeks to enhance the capabilities of imaging instruments by viewing imaging as an inverse problem. Plug-and-Play Priors (PnP) is one of the most popular frameworks for solving computational imaging problems through the integration of physical and learned models. PnP leverages high-fidelity physical sensor models and powerful machine learning methods to provide state-of-the-art imaging algorithms. PnP algorithms alternate between minimizing a data-fidelity term to promote data consistency and imposing a learned regularizer in the form of an “artifact-reducing” deep neural network. Recent highly successful applications of PnP algorithms include bio-microscopy, computerized tomography, magnetic resonance imaging, and joint ptycho-tomography. This talk presents a unified and principled review of PnP by tracing its roots, describing its major variations, summarizing main results, and discussing applications in computational imaging.

Advances in Neural Search

The advance of large pre-trained transformer models fundamentally changed Information Retrieval, resulting in substantially better search results without the need of large user-interaction data. In this talk, I will give an overview of different neural search techniques, their advantages and disadvantages, open challenges, and how they can successfully be used to improve any search system.

Explainable AI in Medical Image Analysis: Lessons Learned in Total Joint Arthroplasty (TJA) Research

Orthopedic surgical procedures, and particularly total knee/hip arthroplasty (TKA/THA), are the most common and fastest growing surgeries in the United States. Almost 1.3 million TJA procedures occur on a yearly basis and more than 7 million Americans are currently living with artificial knee and/or hip joints. The widespread adoption of x-ray radiography and their availability at low cost, make them the principal method in assessing TJA and subtle TJA complications, such as osteolysis, implant loosening or infection over time, enabling surgeons to rule out complications and possible needs for revision surgeries. Rapid yet, with the growing number of TJA patients, the routine clinical and radiograph follow-up remain a daunting task for most orthopedic centers. It becomes an overwhelming amount of work, on a human scale, when we consider a radiologist or surgeon presented with the vast number of medical images daily. Smart computational strategies, such as explainable artificial intelligence and deep learning methods are thus required to analyze arthroplasty radiographs automatically and objectively, enabling both naive and experienced practitioners to perform radiographic follow-up with greater ease and speed, providing them with better explainability and interpretability in AI models. In this talk, we will be discussing the effectiveness of explainable AI methods to advance TJA research. We, together, will explore what explainable AI components do in TJA research and how.

The Tip of the Iceberg: How to make ML for Systems work

Machine Learning has become a powerful tool to improve computer systems and there is a significant amount of research ongoing both in academia and industry to tackle systems problems using Machine Learning. Most work focuses on learning patterns and replacing heuristics with these learned patterns to solve systems problems such as compiler optimization, query optimization, failure detection, indexing, caching. However, solutions that truly improve systems need to maintain the efficiency, availability, reliability, and maintainability of systems while integrating Machine Learning into the system. In this talk, I will cover the key aspects and surprising joys of designing, implementing and deploying ML for Systems solutions based on my experiences of building and deploying these systems at Google.

Mirror Neurons from a Developmental Robotics Perspective

Mirror Neurons have been initially discovered in the ventral premotor cortex of macaque monkeys, which seem to represent action and perception in a common framework: they become active when a monkey executes a grasp action, as well as when the monkey observes another monkey or human perform a similar action. The computational modeling of the ‘mirror system’ makes a nice example of how developmental robotics interprets learning, which differs from the current supervised learning systems that can be trained with large, labeled data sets. In developmental robotics, learning data is mostly generated by the learning agent itself and is limited. When external information exists, severe restrictions exist as to what type of data is accessible to the agent. In this talk, I will present a pre-deep learning era mirror neuron modeling, followed by a new model that incorporates the state-of-the art deep neural networks. The latter work indicates that with developmentally valid constraints interesting behaviors may emerge even without feature engineering supporting the hypothesis that mirror neurons develop based on self-observation learning.

Instance Segmentation and 3D Multi-Object Tracking for Autonomous Driving

Autonomous driving promises to change the way we live. It could save lives, provide mobility, reduce wasted time driving, and enable new ways to design our cities. One crucial component in an autonomous driving system is perception, understanding the environment around the car to take proper driving commands. This talk will discuss two perception tasks: instance segmentation and 3D multi-object tracking. In instance segmentation, we discuss different mask representations and propose representing the mask’s boundary as Fourier series. We show that this implicit representation is compact, fast, and gives the highest mAP for a small number of parameters on the dataset MS COCO. Furthermore, during our work on instance segmentation, we found that the Fourier series is linked with the emerging field of implicit neural representations (INR). We show that the general form of the Fourier series is a Fourier mapped perceptron with integer frequencies. As a result, we know that one perceptron is enough to represent any signal if the Fourier mapping matrix has enough frequencies. In 3D MOT, we focus on tracklet management systems, classifying them into count-based and confidence-based systems. We found that the score update functions used previously for confidence-based systems are not optimal. Therefore, we propose better score update functions that give better score estimates. In addition, we used the same technique for the late fusion of object detectors. Finally, we tested our algorithm on the NuScenes and Waymo datasets, giving a consistent AMOTA boost.re given.

Real Artificial Audio Intelligence: Computers Can Also Hear

We already got used to computers somewhat understanding our speech, and we can experience how well computers can already see, for example in autonomous vehicles. But can they also hear as good as we can or beyond? This talk introduces a new perspective on Computer Audition, by dissecting sounds into the individual sound sources and attributing them rich states and traits. Likewise, the sound of a cup put onto a desk becomes the sound sources “cup” and “desk” with attribution such as “the cup is made of china, has a crack, and seems half-full of liquid” or “the desk is made of pinewood and about 3 cm thick”, etc. As modelling such rich descriptions comes at tremendous data cravings, advances in self-supervised learning for audio as well as zero- and few-shot learning concepts are introduced among other data efficiency techniques. The talk shows a couple of first advances. Beyond, applications reaching from saving our health to saving our planet are given.

Turkish Data Depository Project: Towards a Unified Turkish NLP Research Platform

The Turkish language has been left out of the state-of-the-art Natural Language Processing due to a lack of organized research communities. The lack of organized platforms makes it hard for foreign and junior researchers to contribute to Turkish NLP. We present the Turkish Data Depository (tdd.ai) project as a remedy for this. The main goal of TDD subprojects is collecting and organizing Turkish Natural Language Processing (NLP) datasets and providing a research basis for Turkish NLP. In this talk, I will present the results of our ongoing efforts to build TDD. I will go over our recently published user-friendly hub for Turkish NLP datasets (data.tdd.ai). Moreover, I will present our recently accepted ACL’22 paper on Mukayese (mukayese.tdd.ai), a benchmarking platform for various Turkish NLP tools and tasks, ranging from Spell-checking to Natural Language Understanding tasks (NLU).

State of The Art in Deep Learning for Image/Video Super-Resolution

Recent advances in neural architectures and training methods led to significant improvements in the performance of learned image/video restoration and SR. We can consider learned image restoration and SR as learning either a mapping from the space of degraded images to ideal images based on the universal approximation theorem or a generative model that captures the probability distribution of ideal images. An important benefit of data-driven deep learning approach is that neural models can be optimized for any differentiable loss function, including visual perceptual loss functions, leading to perceptual video restoration and SR, which cannot be easily handled by traditional model-based approaches. I will discuss loss functions and evaluation criteria for image/video restoration and SR, including fidelity and perceptual criteria, and the relation between them, where we briefly review the perception vs. fidelity (distortion) trade-off. We then discuss practical problems in applying supervised training to real-life restoration and SR, including overfitting image priors and overfitting the degradation model and some possible ways to deal with these problems.

Prediction of Protein-Protein Interactions Using Deep Learning

Proteins interact through their interfaces to fulfill essential functions in the cell. They bind to their partners in a highly specific manner and form complexes that have a profound effect on understanding the biological pathways they are involved in. Any abnormal interactions may cause diseases. As experimental data accumulates, artificial intelligence (AI) begins to be used and recent groundbreaking applications of AI profoundly impact the structural biology field. In this talk, we will discuss the deep learning methods applied for the prediction of protein-protein interactions and their interfaces.

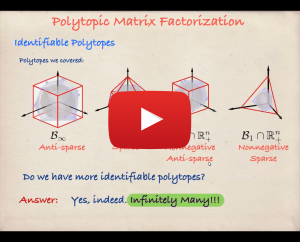

Polytopic Matrix Factorization and Information Maximization Based Unsupervised Learning

We introduce Polytopic Matrix Factorization (PMF) as a flexible unsupervised data decomposition approach. In this new framework, we model input data as unknown linear transformations of some latent vectors drawn from a polytope. The choice of polytope reflects the presumed features of the latent components and their mutual relationships. As the factorization criterion, we propose the determinant maximization (Det-Max) for the sample autocorrelation matrix of the latent vectors. We introduce a sufficient condition for identifiability, which requires that the convex hull of the latent vectors contains the maximum volume inscribed ellipsoid of the polytope with a particular tightness constraint. Based on the Det-Max criterion and the proposed identifiability condition, we show that all polytopes that satisfy a particular symmetry restriction qualify for the PMF framework. Having infinitely many polytope choices provides a form of flexibility in characterizing latent vectors. In particular, it is possible to define latent vectors with heterogeneous features, enabling the assignment of attributes such as nonnegativity and sparsity at the subvector level. We also propose an information-theoretic perspective for the determinant maximization-based matrix factorization frameworks. As further extensions, we will discuss the normative construction of neural networks based on local update rules.

Key Elements of the future legally binding instrument of the Council of Europe (CoE) Ad-Hoc Committee on AI (CAHAI)

The key elements of the CoE AI Convention, which will be the first Convention on AI in the world, have been prepared by an ad-hoc committee called CAHAI, which was established in 2019. CAHAI completed its mission with its last meeting on November 30-December 2, 2021, leaving behind a text detailing with the key elements that the binding international AI convention will come into force. In this event, this text prepared by CAHAI will be discussed with the participants explaining key elements regarding the entire lifecycle of AI.

Improving Human-Robot Conversational Groups

This work aims to improve human-robot conversational groups, in which a robot is situated in an F-formation with humans. With a naive look, each robot consists of input devices e.g., sensors, cameras, etc. logic and decision-making blocks e.g., face detection algorithm, NLP, etc., and output devices e.g., actuators and speakers, etc. These components connect serially. Each component is prone to errors; therefore, each error feeds into the next component and decreases the overall efficiency of the system. For example, if the camera cannot see a person because of being obstructed by an object, then the human detection algorithm cannot detect that person and then the robot won’t consider that person in the interaction. These types of errors decrease the efficiency of the system and also negatively impact human-robot interaction. In this work, we propose four systems that aim to help understand human-robot conversational groups better, reason about them, find the mentioned errors and overcome them. First, we look at the difference between human-human conversational groups and human-robot conversation groups. Second, we propose an algorithm to detect conversational groups (F-formations). Third, we look at how to detect missing people in the conversational groups and validate human-detection algorithms. Last, we propose an algorithm to detect the active speaker based on visual cues and help robots behave normally in conversational groups.

ML Model Optimization with Weights & Biases

We had our very first tutorial talk!

Weights & Biases introduced their platform to monitor training of neural network models.

Two Challenges Behind Visual Dialogues: Grounding Negation and Asking an Informative Question

Visual Dialogues are an intriguing challenge for the Computer Vision and Computational Linguistics communities. They involve both understanding multimodal inputs as well as generating visually grounded questions. We take the GuessWhat?! game as test-bed since it has a simple dialogue structure — Yes-No question answer asymmetric exchanges. We wonder to which extent State-Of-The-Art models take the answers into account and in particular whether they handle positively/negatively answered questions equally well. Moreover, the task is goal oriented: the questioner has to guess the target object in an image. As such it is well suited to study dialogue strategies. SOTA systems are shown to generate questions that, although grammatically correct, often lack an effective strategy and sound unnatural to humans. Inspired by the cognitive literature on information search and cross-situational word learning, we propose Confirm-it, a model based on a beam search re-ranking algorithm that guides an effective goal-oriented strategy by asking questions that confirm the model’s conjecture about the referent. We show that dialogues generated by Confirm-it are more natural and effective than beam search decoding without re-ranking. The work is based on the following publications: Alberto Testoni, Claudio Greco and Raffaella Bernardi Artificial Intelligence models do not ground negation, humans do. GuessWhat?! dialogues as a case study Front.ers in Big Data doi: 10.3389/fdata.2021.736709 Alberto Testoni, Raffaella Bernardi “Looking for Confirmations: An Effective and Human-Like Visual Dialogue Strategy”. In Proceedings of EMNLP 2021 (Short paper).

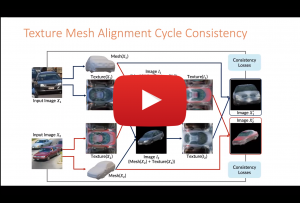

Controllable Image Synthesis

With GAN based models achieving realistic image synthesis on various objects, there has been an increased interest to deploy them for gaming, robotics, architectural designs, and AR/VR applications. However, such applications also require full controllability on the synthesis. To enable controllability, image synthesis has been conditioned on various inputs such as semantic maps, keypoints, and edges to name a few. With these methods, control and manipulation over generated images are still limited. In a new line of research, methods are proposed to learn 3D attributes from images for precise control on the rendering. In this talk, I will cover a range of image synthesis works, starting with conditional image synthesis and continue with 3D attributes learning from single view images for the aim of image synthesis.

An Interdisciplinary Look at Fairness in AI-driven Assistive Technologies: Mapping Value Sensitive Design (Human-Computer Interaction) onto Data Protection Principles

Value sensitive design (VSD) in Human-Computer Interaction is an established method for integrating values into technical design. Design of AI-driven technologies for vulnerable data subjects requires a particular attention to values such as transparency, fairness, and accountability. To achieve this, there is a need for an interdisciplinary look to the fairness principle in data protection law to bridge the gap between what the law requires and what happens in practice. This talk explores the interdisciplinary approach to Fairness in AI-driven Assistive Technologies through mapping VSD onto Data Protection rules.

VALSE : A Task-Independent Benchmark for Vision and Language Models Centered on Linguistic Phenomena

I will talk about a recent collaborative work on VALSE (Vision And Language Structured Evaluation), a novel benchmark designed for testing general-purpose pretrained vision and language (V&L) models for their visio-linguistic grounding capabilities on specific linguistic phenomena. VALSE offers a suite of tests covering various linguistic constructs. Solving these requires models to ground linguistic phenomena in the visual modality, allowing more fine-grained evaluations than hitherto possible. We build VALSE using methods that support the construction of valid foils, and report results from evaluating five widely-used V&L models. Our experiments suggest that current models have considerable difficulty addressing most phenomena. Hence, we expect VALSE to serve as an important benchmark to measure future progress of pretrained V&L models from a linguistic perspective, complementing the canonical task centred V&L evaluations.

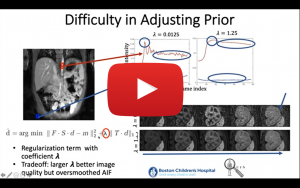

Quantification of Clinically Useful Information from 3D and 4D MR Images: Computational and Deep Learning Techniques for Image Reconstruction, Motion Compensation and Quantitative Parameter Estimation

The talk will focus on the use of medical imaging, computational and deep learning techniques for the discovery and quantification of clinically useful information from 3D and 4D medical images. The talk will describe how computational techniques or deep learning methods can be used for the reconstruction of MR images from undersampled (limited) data for accelerated MR imaging, motion-compensated imaging and robust quantitative parameter estimation and image analysis. It will also show the clinical utility of these proposed techniques for the interpretation of medical images and extraction of important clinical markers in applications such as functional imaging of kidneys and Crohn’s disease.

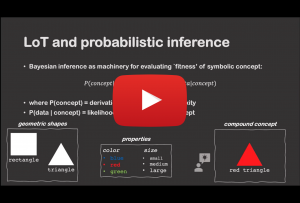

Algorithms of Adaptation in Inductive Inference

We investigate the idea that human concept inference utilizes local incremental search within a compositional mental theory space. To explore this, we study judgments in a challenging task, where participants actively gather evidence about a symbolic rule governing the behavior of a simulated environment. Participants construct mini-experiments before making generalizations and explicit guesses about the hidden rule. They then collect additional evidence themselves (Experiment 1) or observe evidence gathered by someone else (Experiment 2) before revising their own generalizations and guesses. In each case, we focus on the relationship between participants’ initial and revised guesses about the hidden rule concept. We find an order effect whereby revised guesses are anchored to idiosyncratic elements of the earlier guesses. To explain this pattern, we develop a family of process accounts that combine program induction ideas with local (MCMC-like) adaptation mechanisms. A particularly local variant of this adaptive account captures participants’ revisions better than a range of alternatives. We take this as suggestive that people deal with the inherent complexity of concept inference partly through use of local adaptive search in a latent compositional theory space.

Learning Preferences for Interactive Autonomy

In human-robot interaction or more generally multi-agent systems, we often have decentralized agents that need to perform a task together. In such settings, it is crucial to have the ability to anticipate the actions of other agents. Without this ability, the agents are often doomed to perform very poorly. Humans are usually good at this, and it is mostly because we can have good estimates of what other agents are trying to do. We want to give such an ability to robots through reward learning and partner modeling. In this talk, I am going to talk about active learning approaches to this problem and how we can leverage preference data to learn objectives. I am going to show how preferences can help reward learning in the settings where demonstration data may fail, and how partner-modeling enables decentralized agents to cooperate efficiently.

A Novel Haptic Feature Set for the Classification of Interactive Motor Behaviors in Collaborative Object Transfer

Haptics provides a natural and intuitive channel of communication during the interaction of two humans in complex physical tasks, such as joint object transportation. However, despite the utmost importance of touch in physical interactions, the use of haptics is under-represented when developing intelligent systems. This study explores the prominence of haptic data to extract information about underlying interaction patterns within physical human-human interaction (pHHI). We work on a joint object transportation scenario involving two human partners, and show that haptic features, based on force/torque information, suffice to identify human interactive behavior patterns. We categorize the interaction into four discrete behavior classes. These classes describe whether the partners work in harmony or face conflicts while jointly transporting an object through translational or rotational movements. In an experimental study, we collect data from 12 human dyads and verify the salience of haptic features by achieving a correct classification rate over 91% using a Random Forest classifier.

Shifting Paradigms in Multi-Object Tracking

The challenging task of multi-object tracking (MOT) requires simultaneous reasoning about track initialization, identity, and spatiotemporal trajectories. This problem has been traditionally addressed with the tracking-dy-detection paradigm. In this talk, I will discuss more recent paradigms, most notably, tracking-by-regression, and the rise of a new paradigm: tracking-by-attention. In this new paradigm, we formulate MOT as a frame-to-frame set prediction problem and introduce TrackFormer, an end-to-end MOT approach based on an encoder-decoder Transformer architecture. Our model achieves data association between frames via attention by evolving a set of track predictions through a video sequence. The Transformer decoder initializes new tracks from static object queries and autoregressively follows existing tracks in space and time with the new concept of identity preserving track queries. Both decoder query types benefit from self- and encoder-decoder attention on global frame-level features, thereby omitting any additional graph optimization and matching or modeling of motion and appearance. At the end of the talk, I also want to discuss some of our work in collecting data for tracking with data privacy in mind.

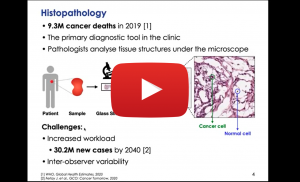

How strong are the weak labels in digital histopathology?

Histopathology is the golden standard in the clinic for cancer diagnosis and treatment planning. Recently, slide scanners have transformed histopathology into digital, where glass slides are digitized and stored as whole-slide-images (WSIs). WSIs provide us with precious data that powerful deep learning models can exploit. However, a WSI is a huge gigapixel image that traditional deep learning models cannot process. Besides, deep learning models require a lot of labeled data. Nevertheless, most WSIs are either unannotated or annotated with some weak labels indicating slide-level properties, like a tumor slide or a normal slide. This seminar will discuss our novel deep learning models tackling huge images and exploiting weak labels to reveal fine-level information within the images. Firstly, we developed a weakly supervised clustering framework. Given only the weak labels of whether an image contains metastases or not, this framework successfully segmented out breast cancer metastases in the lymph node sections. Secondly, we developed a deep learning model predicting tumor purity (percentage of cancer cells within a tissue section) from digital histopathology slides. Our model successfully predicted tumor purity in eight different TCGA cohorts and a local Singapore cohort. The predictions were highly consistent with genomic tumor purity values, which were inferred from genomic data and accepted as accurate for downstream analysis. Furthermore, our model provided tumor purity maps showing the spatial variation of tumor purity within sections, which can help better understand the tumor microenvironment.

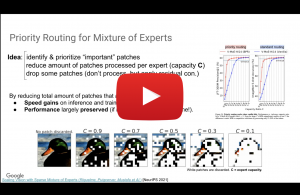

Switch Transformers: Scaling to Trillion Parameter Models with Simple and Efficient Sparsity

In deep learning, models typically reuse the same parameters for all inputs. Mixture of Experts (MoE) models defy this and instead select different parameters for each incoming example. The result is a sparsely-activated model – with an outrageous number of parameters – but a constant computational cost. However, despite several notable successes of MoE, widespread adoption has been hindered by complexity, communication costs, and training instability. We address these with the Switch Transformer. We simplify the MoE routing algorithm and design intuitive improved models with reduced communication and computational costs. Our proposed training techniques mitigate the instabilities, and we show large sparse models may be trained, for the first time, with lower precision formats. We design models based off T5-Base and T5-Large (Raffel et al., 2019) to obtain up to 7x increases in pre-training speed with the same computational resources. These improvements extend into multilingual settings where we measure gains over the mT5-Base version across all 101 languages. Finally, we advance the current scale of language models by pre-training up to trillion parameter models on the “Colossal Clean Crawled Corpus”, and achieve a 4x speedup over the T5-XXL model.

Uncovering The Neural Circuitry in The Brain Using Recurrent Neural Networks

The talk is structured in two parts. The first part focuses on the developments in recurrent neural network training algorithms over the years. We first identify the types of recurrent neural networks currently used in neuroscience research based on the training properties and target function. Here, we will discuss the seminal work by Sompolinsky and Crisanti from 1988 regarding chaos in random neural networks, the reservoir computing paradigm, back-propagation through time, and neural activation (not output) based training algorithms. In the second part, we will go through a selection of papers from neuroscience literature using these methods to uncover the neural circuitry in the brain. As machine learning and neuroscience literature have always inspired progress in each other, there is a high chance that some of these biological findings might have direct relevance in artificial neural network research. We will conclude with some candidate ideas.

Challenging the Standards for Socio-Political Event Information Collection

Spatio-temporal distribution of socio-political events sheds light on the causes and effects of government policies and political discourses that resonate in society. Socio-political event data is utilized for national and international policy- and decision-making. Therefore, the reliability and validity of these datasets are of utmost importance. I will present a summary of my studies that examine common assumptions made during creating socio-political event databases such as GDELT and ICEWS. The assumptions I tackled have been 1) keyword filtering is an essential step for determining the documents that should be analyzed further, 2) a news report contains information about a single event, 3) sentences that are self-contained in terms of event information coverage are the majority, and 4) automated tool performance on new data is comparable to the performance on the validation setting. Moreover, I will present how my work brought the computer science and socio-political science communities together to quantify state-of-the-art automated tool performances on event information collection in cross-context and multilingual settings in the context of a shared task and workshop series, which are ProtestNews Lab @ CLEF 2019, COPE @ Euro CSS 2019, AESPEN @ LREC 2020, and CASE @ ACL 2021, I initiated.

Text-Guided Image Manipulation Using GAN Inversion