Arabic-ALBERT

August 26, 2020

Ali Safaya

Koç University, CS – PhD Student

Keywords

Transformers, ALBERT

Acknowledgement

Thanks to Google for providing free TPU for the training process and for Huggingface for hosting these models on their servers

Pretraining data

The models were pretrained on ~4.4 Billion words:

- Arabic version of OSCAR (unshuffled version of the corpus) – filtered from Common Crawl

- Recent dump of Arabic Wikipedia

Notes on training data:

- Our final version of corpus contains some non-Arabic words inlines, which we did not remove from sentences since that would affect some tasks like NER.

- Although non-Arabic characters were lowered as a preprocessing step, since Arabic characters do not have upper or lower case, there is no cased and uncased version of the model.

- The corpus and vocabulary set are not restricted to Modern Standard Arabic, they contain some dialectical Arabic too.

Pretraining details

- These models were trained using Google ALBERT’s github repository on a single TPU v3-8 provided for free from TFRC.

- Our pretraining procedure follows training settings of bert with some changes: trained for 7M training steps with batchsize of 64, instead of 125K with batchsize of 4096.

Models

| albert-base | albert-large | albert-xlarge | |

|---|---|---|---|

| Hidden Layers | 12 | 24 | 24 |

| Attention heads | 12 | 16 | 32 |

| Hidden size | 768 | 1024 | 2048 |

Results

Note: More results on other downstream NLP tasks will be added soon. if you use any of these models, we would appreciate your feedback.

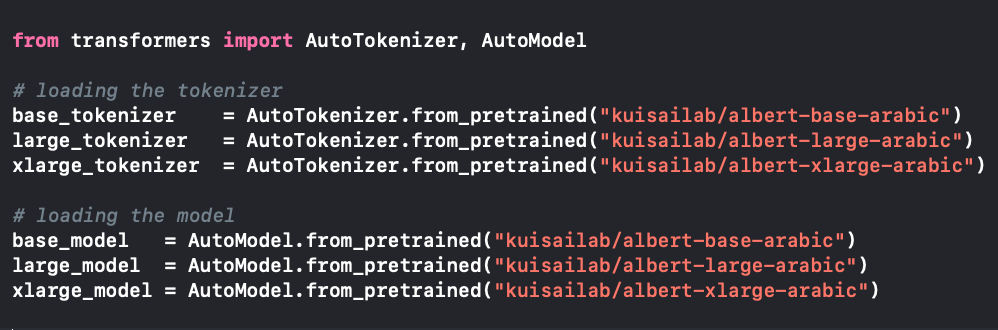

How to use

You can use these models by installing torch or tensorflow and Huggingface library transformers. And you can use it directly by initializing it like this: