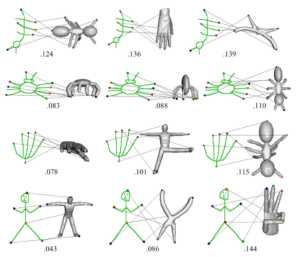

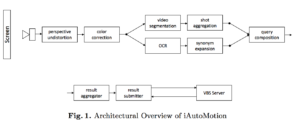

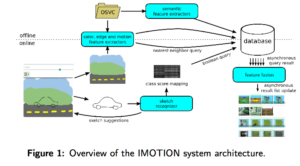

IMOTION – Searching for Video Sequences Using Multi-Shot Sketch Queries

This paper presents the second version of the IMOTION system, a sketch-based video retrieval engine supporting multiple query paradigms. For the second version, the functionality and the usability of the system have been improved.

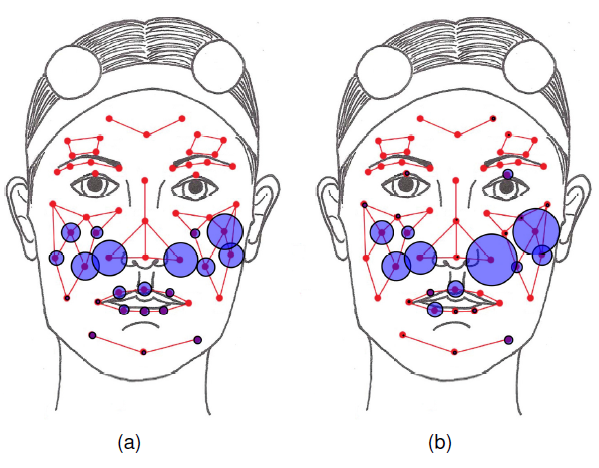

Real-Time Activity Prediction: A Gaze-Based Approach for Early Recognition of Pen-Based Interaction Tasks

In this paper (1) an existing activity prediction system for pen-based devices is modified for real-time activity prediction and (2) an alternative time-based activity prediction system is introduced. Both systems use eye gaze movements that naturally accompany pen-based user interaction for activity classification.

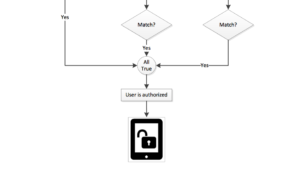

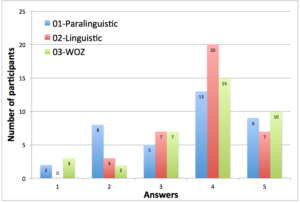

Multimodal Data Collection of Human-Robot Humorous Interactions in the JOKER Project

This paper presents a data collection of social interaction dialogs involving humor between a human participant and a robot. In this work, interaction scenarios have been designed in order to study social markers such as laughter.